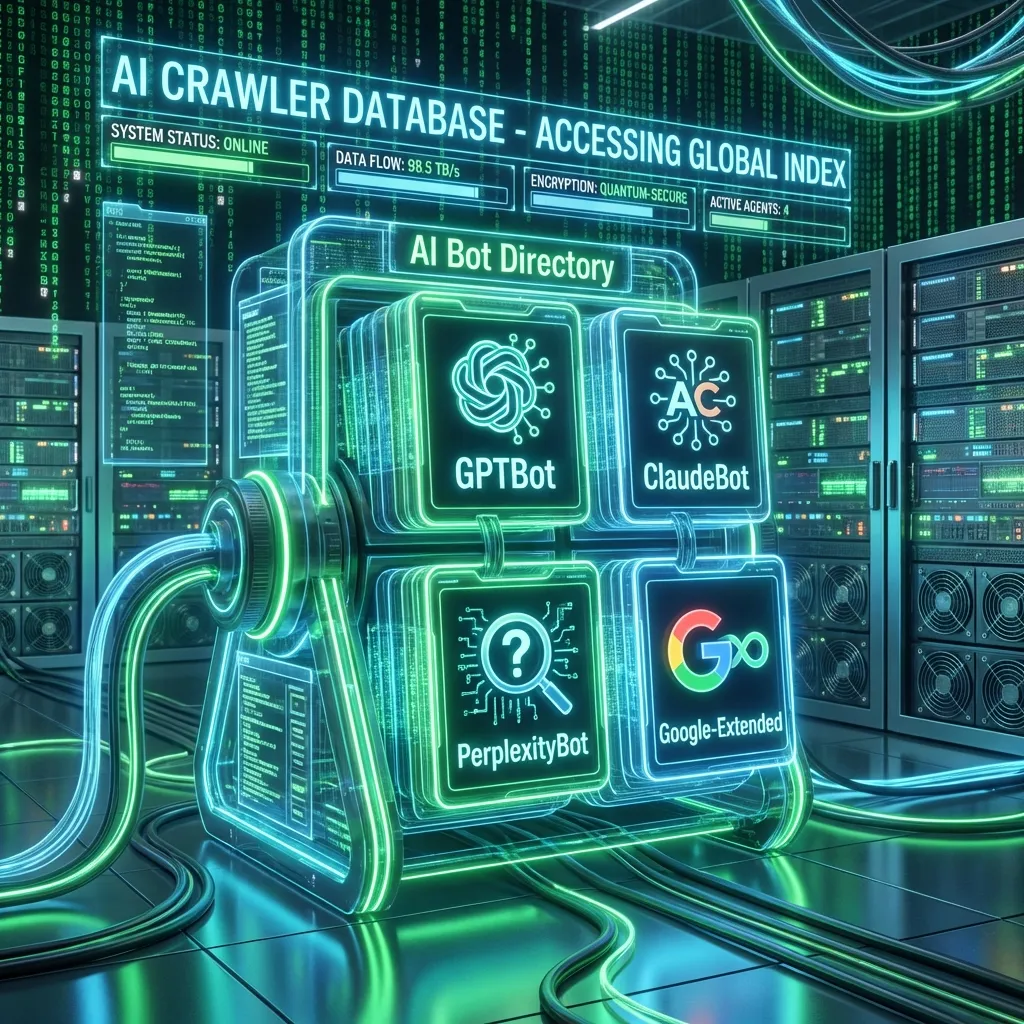

The AI Bot Directory 2026: Who is Crawling Your Site?

In 2026, your website is being visited by two types of visitors: Humans and Machines.

Understanding the Machines (AI Crawlers) is now a critical part of Technical SEO. Block the wrong one and you can drop out of search results and AI answers. Allow the wrong one and your proprietary data can end up fueling your competitor's LLM.

Here is the definitive ViaMetric AI Bot Directory.

The Leaders

1. GPTBot (OpenAI)

- Purpose: Training data for future models (GPT-5, etc.).

- User-Agent: `GPTBot`

- Behavior: Respects robots.txt. Crawls at high volume.

- Recommendation: Block if you have proprietary data (paywalls, user content). Allow if you want to be part of the "world knowledge."

User-agent: GPTBot

Disallow: /

2. ChatGPT-User (OpenAI)

- Purpose: Live browsing for ChatGPT users.

- User-Agent: `ChatGPT-User`

- Behavior: Fetches pages only when a user asks a specific question that requires browsing.

- Recommendation: ALWAYS ALLOW. Blocking this means ChatGPT cannot read your site to answer a user's question, so you lose the citation and the traffic that comes with it.

3. PerplexityBot (Perplexity)

- Purpose: Real-time answer engine indexing.

- User-Agent: `PerplexityBot`

- Recommendation: ALWAYS ALLOW. Perplexity is a massive traffic driver. Being indexed here is critical for GEO.

4. ClaudeBot (Anthropic)

- Purpose: Training and Retrieval for Claude models.

- User-Agent: `ClaudeBot` (formerly `Claude-Web`)

- Recommendation: Treat it the same way as GPTBot.

5. Google-Extended (Google Gemini)

- Purpose: Training data for Gemini/Bard.

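- User-Agent: `Google-Extended`

- Note: Blocking this token lets you keep your content out of Gemini training without removing it from Google Search (which uses `Googlebot`). It is a robots.txt control token rather than a separate crawler, so it will not appear under that name in your access logs.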

The "Silent" Scrapers

Not all bots play nice.

- CCBot (Common Crawl): Builds the public Common Crawl dataset, which is widely used as AI training data. If you block GPTBot but allow CCBot, your content can still reach OpenAI and other AI companies through that dataset.

- Bytespider (ByteDance): Very aggressive crawler for TikTok/Doubao.
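To see which of these crawlers actually show up in your server logs, you can match the User-Agent header against the tokens listed in this directory. The following is a minimal sketch, not a complete detector: the labels are shorthand for the descriptions above, the sample header strings are made up for illustration, and a User-Agent header can be spoofed, so treat a match as a hint rather than proof.

```python
from typing import Optional

# Tokens and roles as described in this directory; extend the table as needed.
AI_BOT_TOKENS = {
    "GPTBot": "training (OpenAI)",
    "ChatGPT-User": "live browsing (OpenAI)",
    "PerplexityBot": "answer engine (Perplexity)",
    "ClaudeBot": "training and retrieval (Anthropic)",
    "CCBot": "training corpus (Common Crawl)",
    "Bytespider": "crawler (ByteDance)",
}


def classify_user_agent(user_agent: str) -> Optional[str]:
    """Return the directory label for a User-Agent header, or None if no token matches."""
    lowered = user_agent.lower()
    for token, label in AI_BOT_TOKENS.items():
        # Case-insensitive substring match on the bot token.
        if token.lower() in lowered:
            return label
    return None


if __name__ == "__main__":
    # Illustrative header values only, not the bots' real full strings.
    samples = [
        "Mozilla/5.0 (compatible; GPTBot/1.0)",
        "Mozilla/5.0 (X11; Linux x86_64) Firefox/120.0",
    ]
    for ua in samples:
        print(ua, "->", classify_user_agent(ua))
```

Running this over the User-Agent column of an access log gives a rough count of hits per crawler, which helps decide which robots.txt rules are worth writing.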

How to Audit Your Robots.txt

Your robots.txt file is now the gatekeeper of your AI strategy.

Common Mistake:

User-agent: *

Disallow: /

This nuclear option blocks every compliant crawler, search engines included, and kills both SEO and GEO.
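A quick way to catch this mistake is to ask whether a crawler with no dedicated rule group can still reach your homepage. Here is a small sketch using Python's standard `urllib.robotparser`; the function name and the agent name `UnlistedExampleBot` are made up for this example.

```python
from urllib.robotparser import RobotFileParser


def blocks_all_crawlers(robots_txt: str) -> bool:
    """True if an agent with no dedicated group is denied the site root."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # "UnlistedExampleBot" is a made-up name that only a "*" group can match.
    return not parser.can_fetch("UnlistedExampleBot", "/")


# Keep User-agent and its rules on adjacent lines: this parser treats a
# blank line as the end of a group.
BLANKET_BLOCK = "User-agent: *\nDisallow: /\n"
TARGETED_BLOCK = "User-agent: GPTBot\nDisallow: /\n"

if __name__ == "__main__":
    print(blocks_all_crawlers(BLANKET_BLOCK))   # True
    print(blocks_all_crawlers(TARGETED_BLOCK))  # False
```

A targeted rule for a single training bot leaves every other crawler untouched, which is the behavior you want.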

The GEO-Optimized Strategy:

# Allow Search Engines & Answer Engines

User-agent: Googlebot

Allow: /

User-agent: PerplexityBot

Allow: /

User-agent: ChatGPT-User

Allow: /

# Block specific training bots (Optional)

User-agent: GPTBot

Disallow: /private-data/
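Before deploying a file like this, it helps to check it against the policy you intended. The sketch below parses the rules above with Python's standard `urllib.robotparser` and reports any bot whose access differs from what you expect. The `audit` helper, the `CHECKS` table, and the paths `/pricing`, `/blog/`, and `/private-data/report.html` are assumptions made up for this example. The standard-library parser applies the first matching rule in a group, which can differ from how individual crawlers resolve overlapping rules, so use it as a sanity check only.

```python
from urllib.robotparser import RobotFileParser

GEO_ROBOTS_TXT = """\
# Allow Search Engines & Answer Engines
User-agent: Googlebot
Allow: /

User-agent: PerplexityBot
Allow: /

User-agent: ChatGPT-User
Allow: /

# Block specific training bots (Optional)
User-agent: GPTBot
Disallow: /private-data/
"""

# (user agent, path, expected to be allowed?) -- hypothetical paths.
CHECKS = [
    ("Googlebot", "/", True),
    ("PerplexityBot", "/pricing", True),
    ("ChatGPT-User", "/", True),
    ("GPTBot", "/blog/", True),
    ("GPTBot", "/private-data/report.html", False),
]


def audit(robots_txt, checks):
    """Return (agent, path, expected, actual) for every check that fails."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    failures = []
    for agent, path, expected in checks:
        actual = parser.can_fetch(agent, path)
        if actual != expected:
            failures.append((agent, path, expected, actual))
    return failures


if __name__ == "__main__":
    problems = audit(GEO_ROBOTS_TXT, CHECKS)
    if not problems:
        print("robots.txt matches the intended policy")
    for agent, path, expected, actual in problems:
        print(f"{agent} on {path}: expected allowed={expected}, got {actual}")
```

An empty failure list means every bot in the table is treated as intended. Adding a row for each crawler you care about turns the table into a regression check you can run whenever robots.txt changes.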

Want to test if you are blocking the wrong bots? Use our free Robots.txt Inspector.

Davide Agostini

Android Mobile Engineer and Founder of ViaMetric. Davide specializes in technical SEO and the emerging field of Generative Engine Optimization (GEO), helping founders navigate the shift from links to AI citations.

Frequently Asked Questions

- What is GPTBot?

- GPTBot is OpenAI's web crawler used to fetch data for training future AI models like GPT-5. It is distinct from 'ChatGPT-User', which fetches live data for current user queries.

- Should I block AI bots?

- It depends. Blocking 'Training Bots' (GPTBot) protects your copyright but might reduce long-term model knowledge of your brand. Blocking 'Live Bots' (ChatGPT-User) directly hurts your visibility in current search results.

- What is the User-Agent for Perplexity?

- Perplexity uses `PerplexityBot`. To allow it, add `User-agent: PerplexityBot` followed by `Allow: /` in your robots.txt.